Capturing Organizational Knowledge:

Approaches to Knowledge Management and Supporting Technology

By

Russ Wright

Knowledge Management

Although it is said that money makes the world go around, the use of knowledge has displaced money as the primary business driver because, according to Drucker (1988), organizations discovered that organizational knowledge is the most useful tool for gaining a competitive advantage. Organizations identified and leveraged the knowledge held by individuals and by the organization itself to increase their competitiveness (Baird, Henderson, & Watts, 1997). Over the past three decades, information, and the technology to support it, have grown at an explosive rate, and the wealth of available information has expanded rapidly in many fields, including electronics, computers, and communications technology (Adomavicius, Bockstedt, Gupta, & Kauffman, 2008). Knowledge management is the inevitable result of rapid progress in Information Technology (IT), globalization, and rising awareness of the commercial value of organizational knowledge (Prusak, 2001). The existence of all this information forces organizations to find a way to handle it and transform it into actionable knowledge. Thus, the problem lies not only in interpreting, distilling, and sharing the information, but also in efficiently turning it into knowledge.

The purpose of this document is to explore how knowledge has become the most important resource for an organization and how learning has become the most important capability for an organization that wants to compete in the marketplace. It discusses the background on creating a competitive advantage and the importance of learning within an organization. This document also compares and contrasts the major approaches to knowledge management within an organization and examines the role that computer technology plays in capturing organizational knowledge. The conclusion finds that the field of knowledge management is still evolving and that Web 2.0 technology might change the way knowledge is captured within an organization.

Background

Knowledge for a Competitive Advantage

The realization that knowledge, when organized and viewed through the lens of competitive factors, could help an organization gain a competitive advantage formalized the beginning of knowledge management. Porter (1980) explained that the existing model of developing a business strategy was no longer working. He created a new model that brought together the ideas of the Harvard Business School and the Boston Consulting Group, commonly called the five forces model, as displayed in Figure 1 below. This model used five factors of competition as a basis for a business strategy: (1) industry competitors, (2) pressure from substitute products, (3) bargaining power of suppliers, (4) bargaining power of buyers, and (5) potential entrants. The author explained that analysis of these five areas allowed a business within a particular industry to establish itself, react to these forces of competition, and profit from them. Albers and Brewer (2003) explained that examining each of these five forces required specific knowledge within that particular competitive factor. Accordingly, knowledge management began with the need to understand the complexities of each of the five factors. Yet the knowledge alone was not enough, as organizations had to learn from the analysis of the five factors and adapt to the ever-changing market.

Figure 1

Porter’s Five Forces Model

The Learning Organization

Possessing knowledge of competitive factors is not enough of a business strategy to make an organization competitive and profitable. Instead, the organization must adapt and take advantage of opportunities to remain competitive because learning is an organization’s most important capability (Earl, 2001; Grant, 1996; Zack, 1999a). Nonaka (1991) explained that learning must be integrated into the culture of the organization and not be a separate activity performed by specialists. Senge (1994) described a learning organization as a place where “people continually expand their capacity to create the results they truly desire, where new and expansive patterns of thinking are nurtured, where collective aspiration is set free, and where people are continually learning how to learn together” (p. 1). Thus, a learning organization embraces a culture where the ability to create and share new knowledge will give it a competitive advantage. Still, defining and implementing the required skills for an organization to embrace learning is a complicated process.

Creating and sustaining a learning or knowledge-creating culture requires an organization not only to engage in specific activities, but also to develop a new mindset. Argyris and Schön (1978) theorized that learning involved not only detecting the error and correcting it, but also changing the way the organization behaves as a whole through policy change. Garvin (1993) built upon this work and created a set of activities in which learning organizations must engage to sustain a knowledge-creating culture. The author defined these activities as: (1) systematic problem solving, (2) experimentation with new approaches, (3) learning from their own experience and past history, (4) learning from the experiences and best practices of others, and (5) transferring knowledge quickly and efficiently throughout the organization. The author further explained that applying these practices is not enough, as real change must include an analysis beyond the obvious by delving into the underlying factors. Thus, both learning activities and a culture change are needed to create a learning culture.

All the aforementioned work led to the creation of a field of research commonly called knowledge management. According to Alavi and Leidner (2001), knowledge management is made up of several somewhat overlapping practices that an organization can use to find, create and share knowledge that exists within individuals or processes of an organization. Another view of knowledge management defined it as “an institutional systematic effort to capitalize on the cumulative knowledge that an organization has” (Serban & Jing Luan, 2002, p. 5). Consequently, knowledge management is deeply connected to the people, procedures, and technology within an organization.

Approaches to Knowledge Management

There are different views on the definition of knowledge, which have led to the creation of multiple models of knowledge management (Carlsson, 2003). The first series of approaches to knowledge management defines knowledge as a “Justified True Belief” (Allix, 2003, p. 1). These models place knowledge into different categories (Boisot, 1987; Nonaka & Takeuchi, 1995). The second, more scientific type of knowledge management model views knowledge as an asset and connects its value to intellectual capital (Wiig, 1997). The third type of knowledge management model views knowledge as subjective and focuses on the creation of knowledge within the organization (Demarest, 1997). Which model an organization chooses to use depends upon the organization’s strategic needs (Aliaga, 2000). Thus, there exist many different models of knowledge management for many different needs.

Categorized Knowledge Management

One of the earliest knowledge management models created by Boisot (1987) categorized knowledge into four basic groups as demonstrated in Table 1 below. The first group was codified knowledge which encompassed knowledge that could be packaged for transmission and could have two states, diffused or undiffused. Codified-diffused knowledge was considered public information. Codified-undiffused knowledge was private or proprietary information shared with only a select few. Uncodified knowledge was knowledge that is difficult to package for transmission. Uncodified-undiffused knowledge was personal knowledge. Uncodified-diffused was considered common sense knowledge. The author explained that common sense knowledge develops through the social interactions where individuals share their personal knowledge. The author also pointed out that codified and uncodified are unique categories of knowledge.

Table 1:

Boisot’s Knowledge Category Model

| | Diffused | Undiffused |
|---|---|---|
| Uncodified | Common Sense | Personal Knowledge |
| Codified | Public Knowledge | Proprietary Knowledge |
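The two-by-two classification in Table 1 can be read as a simple lookup on two yes/no questions: is the knowledge codified, and is it diffused? The following is only an illustrative sketch of that reading. The names `KnowledgeCategory` and `classify_boisot` are hypothetical and do not come from Boisot (1987).

```python
from enum import Enum


class KnowledgeCategory(Enum):
    """The four cells of the category model in Table 1 (hypothetical names)."""
    PUBLIC = "Public Knowledge"
    PROPRIETARY = "Proprietary Knowledge"
    COMMON_SENSE = "Common Sense"
    PERSONAL = "Personal Knowledge"


def classify_boisot(codified: bool, diffused: bool) -> KnowledgeCategory:
    """Map the two dimensions described in the text onto one of the four cells."""
    if codified:
        # Codified knowledge can be packaged for transmission.
        return KnowledgeCategory.PUBLIC if diffused else KnowledgeCategory.PROPRIETARY
    # Uncodified knowledge is difficult to package for transmission.
    return KnowledgeCategory.COMMON_SENSE if diffused else KnowledgeCategory.PERSONAL


if __name__ == "__main__":
    for codified in (True, False):
        for diffused in (True, False):
            cell = classify_boisot(codified, diffused)
            print(f"codified={codified}, diffused={diffused}: {cell.value}")
```

For example, a trade secret shared with only a select few would be codified but undiffused, which the sketch places in the proprietary cell.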

The knowledge management model created by Nonaka and Takeuchi (1995) defined two forms of knowledge: tacit and explicit knowledge. They explained that explicit knowledge is knowledge that is shared in some way and gathered into some storage device, such as a book or computer program, which makes it easy to share with others. Tacit knowledge was explained as internal to a person, somewhat subjective, useful only in a specific context, and difficult to share, as it exists only within the mind of the individual. The authors explained that tacit knowledge could be shared through socialization: social interactions, either face to face or in a shared group experience by members of an organization. This knowledge could become explicit knowledge through externalization when it is formalized into the information systems of the organization. Explicit knowledge can then be compiled and mixed with other existing knowledge through a process called combination. Likewise, explicit knowledge could become tacit through a process of internalization, which happens, for example, when members of the organization are trained on how to use a system. When all of these different modes of knowledge transfer work together they create learning in what the author calls “the spiral of knowledge” (p. 165). Through iterations of learning starting at the individual, and spiraling up into the group and eventually the organization, knowledge accumulates and grows, which leads to innovation and learning (Nonaka, 1991).

Table 2:

Nonaka’s Knowledge Management Model

| From / To | Tacit | Explicit |
|---|---|---|
| Tacit | Socialization | Externalization |
| Explicit | Internalization | Combination |
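The four conversion modes in Table 2 amount to a mapping from a (source form, target form) pair to a named process. The sketch below is one hypothetical way to encode that mapping. The identifiers `CONVERSION_MODES` and `conversion_mode` are illustrative assumptions, not part of the Nonaka and Takeuchi (1995) model.

```python
# Hypothetical encoding of Table 2: (source form, target form) -> conversion mode.
CONVERSION_MODES = {
    ("tacit", "tacit"): "socialization",
    ("tacit", "explicit"): "externalization",
    ("explicit", "explicit"): "combination",
    ("explicit", "tacit"): "internalization",
}


def conversion_mode(source: str, target: str) -> str:
    """Return the name of the process that converts `source` knowledge to `target`."""
    key = (source.strip().lower(), target.strip().lower())
    if key not in CONVERSION_MODES:
        raise ValueError(f"unknown knowledge forms: {source!r} -> {target!r}")
    return CONVERSION_MODES[key]


if __name__ == "__main__":
    # One pass through the modes in the order the text describes them.
    steps = [
        ("tacit", "tacit"),
        ("tacit", "explicit"),
        ("explicit", "explicit"),
        ("explicit", "tacit"),
    ]
    for source, target in steps:
        print(f"{source} -> {target}: {conversion_mode(source, target)}")
```

Each pass through the four modes corresponds to one turn of the knowledge spiral described above. The sketch does not attempt to model how knowledge accumulates from the individual to the group and the organization.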

When comparing and contrasting these two categorical models, it is easy to see some similarities. The tacit and explicit categories from Nonaka (1991) are somewhat similar to the codified and uncodified knowledge categories defined by Boisot (1987). Another similarity is that tacit and explicit, codified and uncodified knowledge categories are considered unique by both authors. Also, both authors mentioned that their models include a sharing of knowledge, which moves knowledge from the person to the larger group. One place the two models differ greatly is that Nonaka (1991) is much more explicit about the idea of collecting knowledge and creating new knowledge through the knowledge spiral. McAdam and McCreedy (2000) criticized these models as too mechanistic and explained that they lacked a holistic view of knowledge management.

Intellectual Capital as Knowledge Management

The Skandia firm developed a scientific model of knowledge management to help measure its intellectual capital. According to Wiig (1997), this tree-like model treats knowledge as a product that can be considered an asset to the organization. The knowledge or intellectual capital has several categories: (1) human, (2) structural, (3) customer, (4) organizational, (5) process, (6) innovation, (7) intellectual, and (8) intangible. The value assigned to each of these categories indicates the organization’s future capabilities. The author defined each of the categories as:

- Human capital is the level of competency for the employees.
- Structural capital is the collection of all intellectual activities of the employees.
- Customer capital is the value of the organization’s relationships with their customers.
- Organizational capital is the knowledge embedded in processes.
- Process capital is the value creating processes.
- Innovation capital is the explicit knowledge and inscrutable knowledge assets.
- Intellectual property is documented and captured knowledge.
- Intangible assets are the value of immeasurable, but important items.

When comparing the Skandia model against the Nonaka and Takeuchi (1995) model, it is possible to see that the two models share the concept of explicit knowledge, defined as innovation capital within the Skandia model. Although the concept of tacit knowledge is not directly mentioned, Wiig (1997) explained that the conversion of tacit knowledge to explicit knowledge, which gives it lasting value, compares to customer capital being transferred to innovation capital in the Skandia model. A study by Grant (1996) criticized the Nonaka and Takeuchi (1995) model because it was based in the context of new product development, whereas the Skandia model would also work for existing products.

Social Construction Model

The last model of knowledge management presented here was created by Demarest (1997) and focused on the creation of knowledge within the context of the organization. The author contends that all organizations have a knowledge economy and in general operate in about the same way. This includes an understanding that commercial knowledge is not truth; instead, it is what works for the situation to produce knowledge that leads to an economic gain. One of the primary assumptions of this model is that the knowledge creation process happens through interactions between members of the organization. The author borrowed several concepts and adapted a model created by Clark and Staunton (1989), which includes the following four phases: (1) construction, (2) dissemination, (3) use, and (4) embodiment. The author defined construction as discovering or structuring some knowledge, embodiment as the process of selecting a container for the new knowledge, dissemination as the sharing of this new knowledge, and use as the creation of commercial value from the new knowledge. The author further explained that the process could flow through all four steps in sequence, or happen simultaneously along a few different paths, such as construction to use and construction to dissemination.

Figure 2

The Demarest Knowledge Management Model

The social construction model seems to be the strongest of the models presented here. When compared with the categorical models of Nonaka and Takeuchi (1995) and Boisot (1987), it shares (1) the concept of knowledge creation as powered by the flow of information within the organization, (2) the concept that knowledge creation happens between the members of the organization, and (3) a sharing of knowledge that moves knowledge from the person to the larger group. According to McAdam and McCreedy (2000), this model differed from the categorical and intellectual capital models because the author included the idea that knowledge is inherently connected to the social and learning processes within the organization, and “knowledge construction is not limited to scientific inputs but includes the social construction of knowledge” (p. 6). Therefore, this model brings together the best parts of the other models.

These knowledge management models span a wide range of perspectives on the definition of knowledge management. The categorical models shared the concept of tacit and explicit knowledge. The intellectual capital model considered knowledge as an asset to be managed efficiently to make an organization successful. The social construction model linked knowledge to the social interactions and learning processes within the organization. The progression of models demonstrates that knowledge management continues to evolve. Grover and Davenport (2001) explained that the main purpose of knowledge management models is to help an organization grow its knowledge base and increase its competitive edge in the marketplace. Thus, no single model is best for helping an organization grow; the choice instead depends on how the organization defines knowledge.

The Role of Computer Technology in Knowledge Management

All the attention on knowledge management has led to increased use of Information Technology (IT) to capture knowledge. Spender and Scherer (2007) explained that “the majority of KM consultants and business people see IT as KM’s principal armamentarium-it is all about collecting, manipulating, and delivering the increasing amounts of information IT’s falling costs have made available” (p. 5). This opinion seems to resonate with Zack (1999b), who proposed a knowledge management strategy for transferring tacit knowledge to a storage format, thereby making it explicit. The author explained that the result of this conversion process is commonly called codified knowledge. He also explained that this model uses IT as a pipeline to connect people to knowledge. Hansen, Nohria, and Tierney (1999) proposed an additional strategy of knowledge management architecture that focused on dialog between individuals, thereby sharing tacit to tacit knowledge, which they called personalization. According to the authors, this model uses IT to connect people to people and exchange tacit knowledge. Thus, the use of information technology to capture knowledge varies based on the organization’s competitive strategy.

The codified knowledge strategy, according to Zack (1999b), is designed to capture knowledge, refine it into something usable, and then place it into a storage device, such as a document repository, where it is reusable by other members of the organization. The ability to store and reuse the knowledge whenever needed creates an economy of reuse, which helps to prevent the constant recreation of knowledge and therefore reduces costs (Cowan & Foray, 1997). According to Hansen et al. (1999), this knowledge strategy, which they call people-to-documents, comes with a significant investment cost for IT because of the need to sort and store large amounts of knowledge, now in data form.

Hansen et al. (1999) defined the personalization knowledge strategy as drawing on the relationships established between individuals in an organization wherein they share tacit knowledge. They further explained that this strategy created an economy of experts within the organization, which they called people-to-people. In contrast to the codified strategy, they explained, this model required a much smaller investment in IT infrastructure because much less knowledge is stored in any digital format; instead, it stays in the minds of the employees.

Managerial Needs

Managers within an organization with a knowledge management strategy need different types of information about the technology used for the knowledge management system. According to research by Jennex and Olfman (2008), managers required multiple measures to gauge the effectiveness and success of a knowledge management system. The managers needed to know about the information quality in the system, how well the users were adapting to using the software, and the overall performance of the knowledge management system. Massey, Montoya-Weiss, and O’Driscoll (2002) explained that managers needed information not only about what is in the system, but also about how well the system was performing so they could assist in removing bottlenecks. Consequently, the information needed by managers not only gauges the effectiveness of the knowledge management system, but also helps to make the system function smoothly.

Pitfalls

Using information technology to capture the knowledge of an organization might be detrimental if done improperly. In a paper by Johannessen, Olaisen and Olson (2001), the authors expressed much concern over the misuse of information technology to manage tacit knowledge within an organization. They argued that, despite the empirical evidence to the contrary, organizations continued to invest in IT systems that may lead to a loss of, or at least a diminishing of, the importance of tacit knowledge. Zack (1999b) explained that competitive performance requires a balance between tacit and explicit knowledge. Nonaka (1994) explained that knowledge within an organization was created by, and flows from, the members of an organization engaging each other and sharing tacit and explicit knowledge. Scheepers, Venkitachalam and Gibbs (2004) extended the research of Hansen et al. (1999) and concluded that an 80/20 mix of codification and personalization strategy, based on the competitive strategy of the organization, was most successful. For these reasons, a balance between tacit and explicit knowledge must be maintained in the organization’s culture and IT infrastructure.

Web 2.0

The new technologies created in the Web 2.0 culture offer some new IT solutions to knowledge management. Web 2.0 technology functions more like the way individuals interact (O’Reilly, 2006). As previously stated, the codified knowledge strategy requires a significant IT investment not only in equipment but also in specialists to gather and organize the knowledge (Hansen et al., 1999). According to Liebowitz (1999), one of the problems with traditional knowledge management technology is that it put the user in the role of passive receiver. Tredinnick (2006), in reference to Web 2.0 technology in knowledge management, explained: “The technologies involved place a greater emphasis on the contributions of users in creating and organizing information than traditional information organization and retrieval approaches” (p. 231). Chui, Miller and Roberts (2009) echoed this same concept when they explained that Web 2.0 technology puts the emphasis on the users generating new information or editing other participants’ work. According to Levy (2009), one of the advantages of Web 2.0 technology is that as individuals shared the knowledge they potentially assisted in the codification process. When they shared their tacit knowledge by posting it in an interactive Web 2.0 tool, such as a wiki, the knowledge began to move to explicit as others read, enhanced, and categorized this knowledge, which moved it up from personal to organizational knowledge. Accordingly, Web 2.0 technologies potentially offer many benefits, among them greater user participation, which creates more knowledge sharing and helps keep the knowledge from becoming stale, and lower costs as participants do more of the work.

Information technology for knowledge management, and specifically for capturing organizational knowledge, depends on the organization’s competitive strategy. The two strategies outlined here, the codified and personalization knowledge strategies, use information technology in different ways: the former builds a repository requiring significant IT investment, and the latter creates a loose network of experts, which requires a smaller IT investment. The experts warn that technology itself is not the answer and that a real strategy with clear plans needs to be in place, or the technology investment will not help the knowledge management process. There are some new Web 2.0 technologies on the horizon that could positively impact user participation in knowledge management technology and save money when codifying knowledge.

Knowledge Management Is Still Evolving

The approaches to knowledge management outlined here show a progression of thought. The models show a progression from a specific portion of knowledge sharing and an absolute definition of knowledge as truth (Boisot, 1987; Nonaka, 1991), to a wider and more generic perspective of sharing knowledge and a more subjective definition of commercial knowledge as truth (Demarest, 1997). Also, the information technology used to support the knowledge management strategy continues to evolve. Both the codified knowledge strategy of Zack (1999b) and the personalization knowledge strategy of Hansen et al. (1999) require technology to capture and share the knowledge. New Web 2.0 technology has the potential to change how much of an investment in technology the organization must make, as this technology is likely to increase the level of participation of the users, making them more active in the knowledge management process. Therefore, the only certainty of knowledge management is the continued growth and change of the models, strategies, and technology.

References

Adomavicius, G., Bockstedt, J. C., Gupta, A., & Kauffman, R. J. (2008). Making sense of technology trends in the information technology landscape: A design science approach. MIS Quarterly, 32(4), 779-809.

Alavi, M., & Leidner, D. E. (2001). Review: Knowledge management and knowledge management systems: Conceptual foundations and research issues. MIS Quarterly, 25(1), 107-136.

Albers, J., & Brewer, S. (2003). Knowledge management and the innovation process: The eco-innovation model. Journal of Knowledge Management Practice, 4(1).

Aliaga, O. A. (2000). Knowledge management and strategic planning. Advances in Developing Human Resources, 2(1), 91-104. doi:10.1177/152342230000200108

Allix, N. (2003). Epistemology and knowledge management concepts and practices. Journal of Knowledge Management Practice, 4(1), 136-152.

Argyris, C., & Schön, D. (1978). Organizational learning: A theory of action perspective. Reading, MA: Addison Wesley.

Baird, L., Henderson, J., & Watts, S. (1997). Learning from action: An analysis of the Center for Army Lessons Learned. Human Resource Management Journal, 36(4), 385-396.

Boisot, M. (1987). Information and organizations: The manager as anthropologist. London, UK: Fontana/Collins.

Carlsson, S. (2003). Knowledge managing and knowledge management systems in inter-organizational networks. Knowledge and Process Management, 10(3), 194-206. doi:10.1002/kpm.179

Chui, M., Miller, A., & Roberts, R. P. (2009). Six ways to make Web 2.0 work. The McKinsey Quarterly, 1-7.

Clark, P., & Staunton, N. (1989). Innovation in technology and organization. London, UK: Routledge.

Cowan, R., & Foray, D. (1997). The economics of codification and the diffusion of knowledge. Industrial and Corporate Change, 6(3).

Demarest, M. (1997). Understanding knowledge management. Long Range Planning, 30(3), 374-384. doi:10.1016/S0024-6301(97)90250-8

Drucker, P. F. (1988). The coming of the new organization. Harvard Business Review, 66(1), 45-53.

Earl, M. (2001). Knowledge management strategies: Toward a taxonomy. Journal of Management Information Systems, 18(1), 215-233.

Garvin, D. A. (1993). Building a learning organization. Harvard Business Review, 71(4), 78-91.

Grant, R. (1996). Prospering in dynamically-competitive environments: Organizational capability as knowledge integration. Organization Science, 7(4), 375-387.

Grover, V., & Davenport, T. H. (2001). General perspectives on knowledge management: Fostering a research agenda. Journal of Management Information Systems, 18(1), 5-21.

Hansen, M. T., Nohria, N., & Tierney, T. (1999). What’s your strategy for managing knowledge? Harvard Business Review, 77(2), 106-116.

Jennex, M. E., & Olfman, L. (2008). A model of knowledge management success. In Current Issues in Knowledge Management (pp. 34-52). Hershey, PA: Information Science Reference.

Johannessen, J. (2001). Mismanagement of tacit knowledge: The importance of tacit knowledge, the danger of information technology, and what to do about it. International Journal of Information Management, 21(1), 3-20. doi:10.1016/S0268-4012(00)00047-5

Levy, M. (2009). Web 2.0 implications on knowledge management. Journal of Knowledge Management, 13(1), 120-134. doi:10.1108/13673270910931215

Liebowitz, J. (1999). Key ingredients to the success of an organization’s knowledge management strategy. Knowledge and Process Management, 6(1), 37-40.

Massey, A. P., Montoya-Weiss, M. M., & O’Driscoll, T. M. (2002). Knowledge management in pursuit of performance: Insights from Nortel Networks. MIS Quarterly, 26(3), 269-289.

McAdam, R., & McCreedy, S. (2000). A critique of knowledge management: Using a local constructionist model. New Technology, Work & Employment, 15(2), 155.

Nonaka, I. (1991). The knowledge-creating company. Harvard Business Review, 85(7/8), 162-171.

Nonaka, I. (1994). A dynamic theory of organizational knowledge creation. Organization Science, 5(1), 14-37.

Nonaka, I., & Takeuchi, H. (1995). The knowledge creating company: How Japanese companies create the dynamics of innovation. Oxford, UK: Oxford University Press.

O’Reilly, T. (2006). Web 2.0 compact definition: Trying again. O’Reilly radar. Retrieved January 24, 2011, from http://radar.oreilly.com/2006/12/web-20-compact-definition-tryi.html

Porter, M. (1980). Competitive strategy: Techniques for analyzing industries and competitors. New York: Free Press.

Prusak, L. (2001). Where did knowledge management come from? IBM Systems Journal, 40(4), 1002-1007.

Scheepers, R., Venkitachalam, K., & Gibbs, M. (2004). Knowledge strategy in organizations: refining the model of Hansen, Nohria and Tierney. The Journal of Strategic Information Systems, 13(3), 201-222. doi:10.1016/j.jsis.2004.08.003

Senge, P. (1994). The fifth discipline: the art and practice of the learning organization (1st ed.). New York: Doubleday/Currency.

Serban, A. M., & Jing Luan. (2002). Overview of knowledge management. New Directions for Institutional Research, 2002(113), 5.

Spender, J., & Scherer, A. (2007). The philosophical foundations of knowledge management: Editors’ introduction. Organization, 14(1), 5-28.

Tredinnick, L. (2006). Web 2.0 and business: A pointer to the intranets of the future? Business Information Review, 23, 228-234.

Wiig, K. (1997). Integrating intellectual capital and knowledge management. Long Range Planning, 30(3), 399-405. doi:10.1016/S0024-6301(97)90256-9

Zack, M. H. (1999a). Developing a knowledge strategy. California Management Review, 41(3), 125-145.

Zack, M. H. (1999b). Managing codified knowledge. Sloan Management Review, 40(4), 45-58.

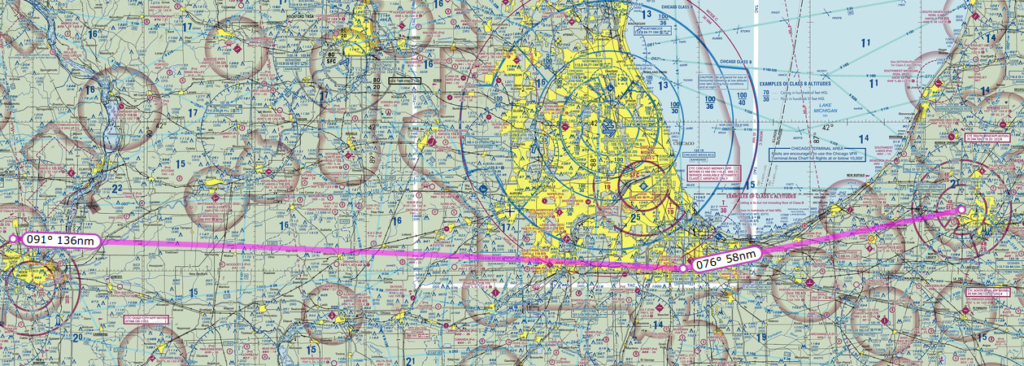

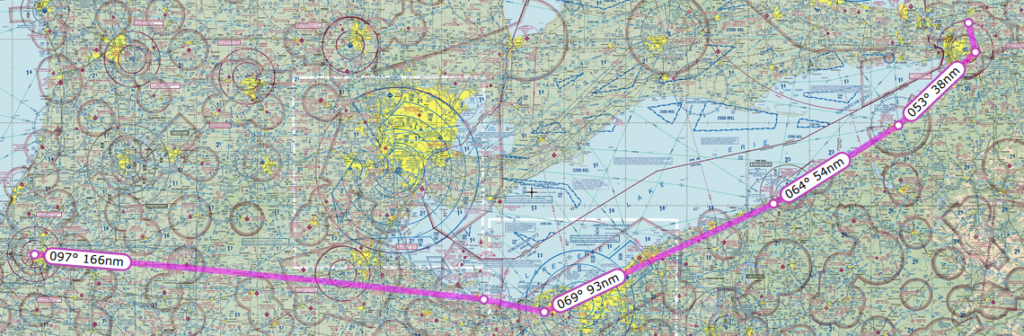

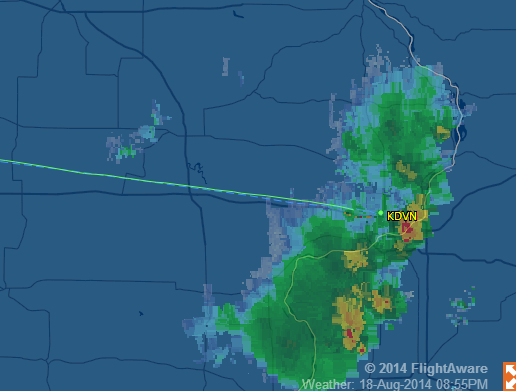

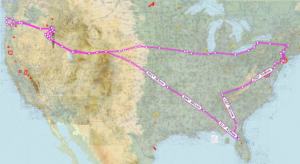

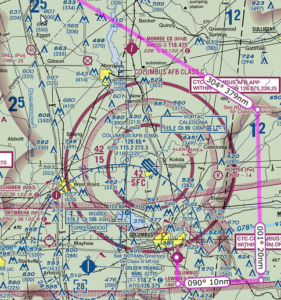

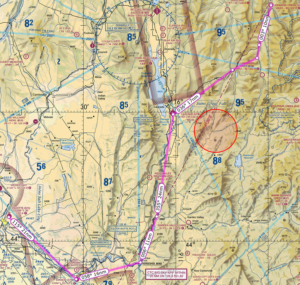

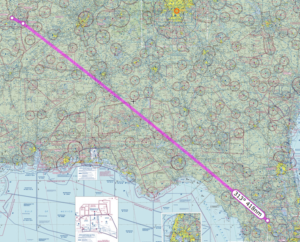

After refuel we took off and had to deal with the Class C airspace right next door. What a pain! Instead of letting me turn west then north, I had to turn south, then east, then north and go all the way around. Quite frustrating, but I understood afterward that had I gone east then north I would have ended up right in the middle of the approach path for incoming flights. We finally got back on track with flight following and continued to KMIO Miami Municipal Airport, located in Miami, Oklahoma. Quick note: Miami is pronounced “my-am-a” at this particular location, as it is a Native American word.

It swayed and rattled but ran just great and got us to dinner and our hotel just fine. We had a great dinner at Montana Mike’s and then cruised Historic Route 66 in the courtesy car. While on Route 66 we saw a bunch of restored landmarks, including an old gas station, a theater, and several shops along the way. The airport itself was located in the middle of acres of corn fields. The runway seemed to be made of a series of cement tiles connected together, which made my tires click out a tune as we landed. I picked this airport because it was on our route and had some of the least expensive 100 octane low-lead fuel, commonly called 100LL, for the plane. Our Piper Cherokee burns about 8 to 10 gallons of fuel in an hour and goes through 40 gallons every four hours of flight. Finding less expensive spots to stop for fuel really saved us some cash.

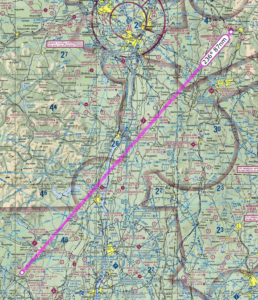

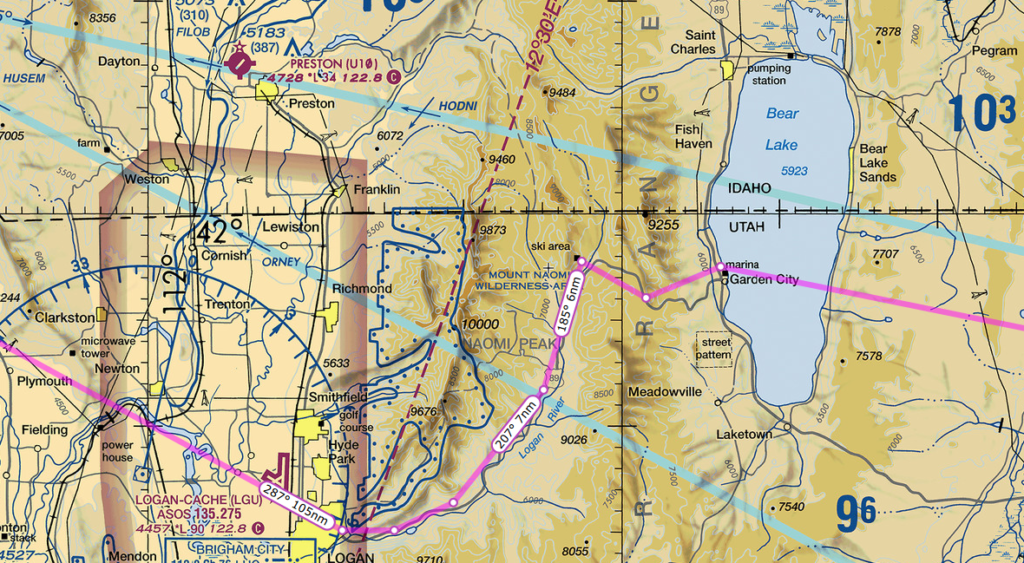

Took off from KRKS Rock Springs early in the morning and had a very slow climb out. Density Altitude (DA) was already 2000+ feet above field elevation at 8:00 am. The field is at 6765 feet, so DA was 8765+ feet at takeoff. With full fuel and 60 lbs under max weight, we rolled down the runway almost 5000 feet and then climbed ever so slowly at 50-100 feet per minute (fpm). At sea level a normal climb for this plane, even fully loaded, is around 500-600 fpm. This poor climbing performance made me a bit nervous because the mountain ridge ahead of us was about 8300 feet high. Slowly got to 8500 to clear the mountain ridge, then lowered the nose and flight-climbed to 10,500 feet.
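The numbers in that takeoff can be put into a rough back-of-the-envelope sketch. It assumes a constant climb rate starting from field elevation and treats the "2000+" figure as a lower bound. The function names are made up for illustration, and none of this replaces the performance charts in the aircraft's handbook.

```python
FIELD_ELEVATION_FT = 6765      # KRKS field elevation, from the text
DA_ABOVE_FIELD_FT = 2000       # "2000+" in the text, treated as a lower bound
CLEARANCE_ALTITUDE_FT = 8500   # altitude reached to clear the 8300-foot ridge


def density_altitude_ft(field_elevation_ft: float, da_above_field_ft: float) -> float:
    """Density altitude as field elevation plus the reported excess."""
    return field_elevation_ft + da_above_field_ft


def minutes_to_climb(start_ft: float, target_ft: float, rate_fpm: float) -> float:
    """Minutes needed to climb from start to target at a constant rate (assumed)."""
    if rate_fpm <= 0:
        raise ValueError("climb rate must be positive")
    return (target_ft - start_ft) / rate_fpm


if __name__ == "__main__":
    da = density_altitude_ft(FIELD_ELEVATION_FT, DA_ABOVE_FIELD_FT)
    print(f"Density altitude at takeoff: at least {da} ft")
    # Compare the observed 50-100 fpm with a 500 fpm sea-level climb.
    for rate in (50, 100, 500):
        minutes = minutes_to_climb(FIELD_ELEVATION_FT, CLEARANCE_ALTITUDE_FT, rate)
        print(f"{rate} fpm: about {minutes:.0f} minutes to reach {CLEARANCE_ALTITUDE_FT} ft")
```

Under those assumptions, gaining the 1735 feet needed to reach 8500 takes somewhere between roughly 17 and 35 minutes at 50-100 fpm, compared with a few minutes at a sea-level climb rate.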

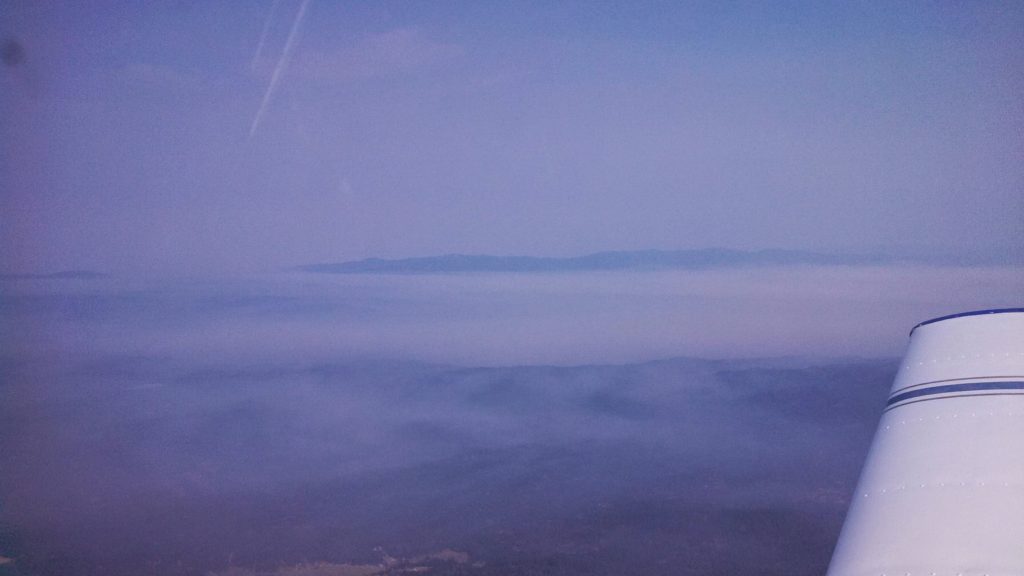

a bit of haze during the flight in that we later discovered was smoke. The breakfast menu is very simple: yes or no. The food was fantastic. The breakfast consisted of eggs, pancakes, sausage, home-made apple butter, and piping hot coffee. We were able to sit outside and watch other planes come in and land on the runway.
